Gravitational Attraction

What would happen if two people out in space a few meters apart, abandoned by their spacecraft, decided to wait until gravity pulled them together? My initial thought was that …

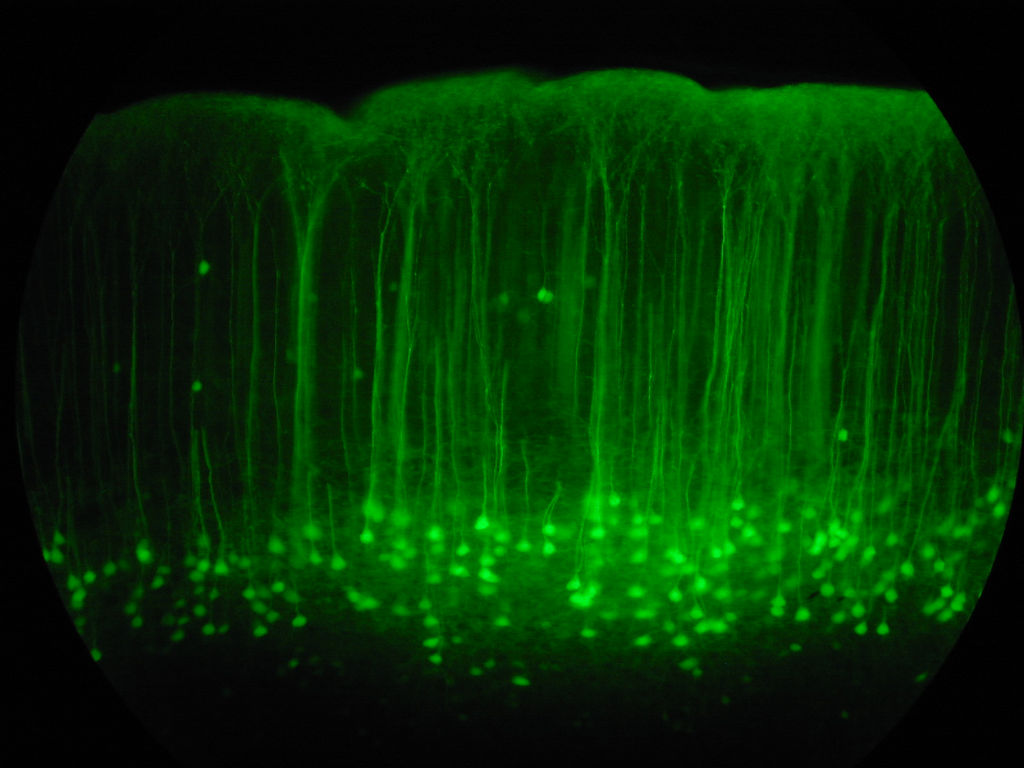

Simulate biologically derived neural networks of medium complexity - both rate- and spike-based models. Explore the dynamics of synaptic plasticity under different input environments, including low-dimensional vector environments and natural image inputs.

plasticnet + splikes: synaptic modification in rate and spike-based neurons

This project aims to provide a simple-to-use neural simulator at a medium level of complexity. The package combines both rate- and spike-based models.

Theory of Cortical Plasticity by Leon N. Cooper, Brian S. Blais, Harel Z. Shouval, Nathan Intrator

Is theory possible in neuroscience? Not only possible, in our opinion, necessary. For a system as complex as the brain it is obvious that we cannot just make observations. (The number of possible observations is substantially larger than the available number of scientist-hours, even projecting several centuries into the future.) Without a theoretical structure to connect diverse observations with one another, the result would be a listing of facts of little use in understanding what the brain is about. In the work that follows, we present the Bienenstock, Cooper and Munro (BCM) theory of synaptic plasticity. The theory is sufficiently concrete so that it can be and, as is discussed below, has been compared with experiment.

This is a collection of natural images used in my research. It contains the original images, scripts for processing the images, as well as scripts to download some of the standard databases, such as the Olshausen and VanHateren image databases.

Blais, B.S. Feb 2013. From Theory to Experiment and Back Again: A Physicist's Journey Through Neuroscience. University of Rhode Island Neuroscience Colloquium. (A Keynote version, which includes the animations, is here.)

A Hierarchical Spatiotemporal Model of Neocortex With Probabilistic Feedback. (Twelfth International Conference on Cognitive and Neural Systems (ICCNS), May 2008)

Notes on Inhibition and BCM. (IBNS Research Meeting, April 2008)

Blais, B.S. 2014. Receptive Field Modeling. In Encyclopedia of Computational Neuroscience, edited by D. Jaeger and R. Jung (Springer New York, 2014), pp. 1-6.

Blais, B.S., Cooper, L.N., and Shouval, H.Z. 2008. Effect of correlated lateral geniculate nucleus firing rates on predictions for monocular eye closure versus monocular retinal inactivation. Physical Review E 80 (6): 061915.

Monocular deprivation experiments can be used to distinguish between different ideas concerning properties of cortical synaptic plasticity. Monocular deprivation by lid suture causes a rapid disconnection of the deprived eye connected to cortical neurons whereas total inactivation of the deprived eye produces much less of an ocular dominance shift. In order to understand these results one needs to know how lid suture and retinal inactivation affect neurons in the lateral geniculate nucleus (LGN) that provide the cortical input. Recent experimental results by Linden et al. showed that monocular lid suture and monocular inactivation do not change the mean firing rates of LGN neurons but that lid suture reduces correlations between adjacent neurons whereas monocular inactivation leads to correlated firing. These, somewhat surprising, results contradict assumptions that have been made to explain the outcomes of different monocular deprivation protocols. Based on these experimental results we modify our assumptions about inputs to cortex during different deprivation protocols and show their implications when combined with different cortical plasticity rules. Using theoretical analysis, random matrix theory and simulations we show that high levels of correlations reduce the ocular dominance shift in learning rules that depend on homosynaptic depression (i.e., Bienenstock-Cooper-Munro type rules), consistent with experimental results, but have the opposite effect in rules that depend on heterosynaptic depression (i.e., Hebbian/principal component analysis type rules).

Blais, B.S., Frenkel, M., Kuindersma, S., Muhammad, R., Shouval, H.Z., Cooper, L.N., and Bear, M.F. 2008. Recovery from monocular deprivation using binocular deprivation: Experimental observations and theoretical analysis. Journal of Neurophysiology 100:2217-2224.

Ocular dominance (OD) plasticity is a robust paradigm for examining the functional consequences of synaptic plasticity. Previous experimental and theoretical results have shown that OD plasticity can be accounted for by known synaptic plasticity mechanisms, using the assumption that deprivation by lid suture eliminates spatial structure in the deprived channel. Here we show that in the mouse, recovery from monocular lid suture can be obtained by subsequent binocular lid suture but not by dark rearing. This poses a significant challenge to previous theoretical results. We therefore performed simulations with a natural input environment appropriate for mouse visual cortex. In contrast to previous work we assume that lid suture causes degradation but not elimination of spatial structure, whereas dark rearing produces elimination of spatial structure. We present experimental evidence that supports this assumption, measuring responses through sutured lids in the mouse. The change in assumptions about the input environment is sufficient to account for new experimental observations, while still accounting for previous experimental results.

BCM (Bienenstock et al., 1982) refers to the theory of synaptic modification first proposed by Elie Bienenstock, Leon Cooper, and Paul Munro in 1982 to account for experiments measuring the selectivity of neurons in primary sensory cortex and its dependency on neuronal input. It is characterized by a rule expressing synaptic change as a Hebb-like product of the presynaptic activity and a nonlinear function, \phi(y;\theta_M), of postsynaptic activity, y. For low values of the postsynaptic activity (y<\theta_M), \phi is negative; for y>\theta_M, \phi is positive. The rule is stabilized by allowing the modification threshold, \theta_M, to vary as a super-linear function of the previous activity of the cell. Unlike traditional methods of stabilizing Hebbian learning, this "sliding threshold" provides a mechanism for incoming patterns, as opposed to converging afferents, to compete. A detailed exploration can be found in the book Theory of Cortical Plasticity (Cooper et al., 2004). For an open-source implementation of the BCM, amongst other synaptic modification rules, see the Plasticity package (http://plasticity.googlecode.com/).
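
The rule described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the implementation used in the Plasticity package: the quadratic form \phi(y;\theta_M) = y(y - \theta_M), the use of a running average of y^2 as the sliding threshold, and the names `phi`, `bcm_step`, `train`, `eta`, and `tau` are all hypothetical choices made for simplicity.

```python
import random


def phi(y, theta):
    """Assumed quadratic modification function.

    Negative for 0 < y < theta, zero at y == theta, positive for y > theta.
    """
    return y * (y - theta)


def bcm_step(w, x, theta, eta=0.005, tau=10.0):
    """Apply one BCM-style update for a single linear neuron.

    w     : list of synaptic weights
    x     : list of presynaptic activities (same length as w)
    theta : current modification threshold
    eta   : learning rate (assumed value)
    tau   : time constant, in steps, of the threshold average (assumed value)
    """
    # Postsynaptic activity of a linear neuron.
    y = sum(wi * xi for wi, xi in zip(w, x))
    # Hebb-like product of presynaptic activity and phi(y; theta).
    new_w = [wi + eta * phi(y, theta) * xi for wi, xi in zip(w, x)]
    # Sliding threshold: running average of y**2, a super-linear
    # function of the cell's recent activity.
    new_theta = theta + (y * y - theta) / tau
    return new_w, new_theta, y


def train(patterns, steps=5000, seed=0):
    """Present randomly chosen input patterns and return (weights, theta)."""
    rng = random.Random(seed)
    w = [rng.uniform(0.05, 0.15) for _ in patterns[0]]
    theta = 0.0
    for _ in range(steps):
        w, theta, _ = bcm_step(w, rng.choice(patterns), theta)
    return w, theta
```

A call such as `train([[1.0, 0.0], [0.0, 1.0]])` presents two orthogonal patterns in random order. Because the threshold moves with the cell's own activity, the patterns compete for the neuron's response rather than the individual synapses competing for a fixed total weight.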

Shouval, H.Z., Gavornik, J.P., Shuler, M., Bear, M.F., and Blais, B.S. 2007. Learning Reward Timing using Reinforced Expression of Synaptic Plasticity. In Collaborative Research in Computational Neuroscience (CRCNS) Conference.

Our collaborative research proposes to study the synaptic and cellular basis of receptive field plasticity in visual cortex. Recently some of our efforts have concentrated on accounting for novel aspects of cellular responses and plasticity observed in the visual cortex of the rat. In recently published findings we provided evidence that pairing visual cues with subsequent rewards in awake behaving animals results in the emergence, in the primary visual cortex (V1), of reward-timing activity (Shuler and Bear, 2006). Further, the properties of reward-timing activity suggest that it is generated locally within V1, implying that V1 is privy to a signal relating the acquisition of reward. We provide here a model demonstrating how such interval timing of reward could emerge in V1. A fundamental assumption of this work is that the timing characteristics of the V1 network are encoded in the lateral connectivity within the network; consequently, the plasticity assumed in this model is of these recurrent connections. Using this assumption, no prior stimulus-locked temporal representation is necessary. The plasticity of recurrent connections is implemented through an interaction between an activity-dependent Hebbian-like plasticity and a neuromodulatory signal signifying reward. It is demonstrated that such a global reinforcement signal is sufficient for interval time learning by stabilizing changes in nascent synaptic efficacy resultant from prior visually-evoked activity. By modifying synaptic weight change within the recurrent network of V1, our model transforms temporally restricted visual events into neurally persistent activity relating to their associated reward timing expectancy.

Shouval, H.Z., Bear, M.F., and Blais, B.S. 2006. The cellular basis of receptive field plasticity in visual cortex, an integrative experimental and theoretical approach. In Collaborative Research in Computational Neuroscience (CRCNS) Conference.

Synaptic plasticity is a likely basis for information storage by the neocortex. Understanding cortical plasticity requires coordinated investigation of both underlying cellular mechanisms and their systems-level consequences in the same model system. However, establishing connections between the cellular and system levels of description is non-trivial. A major contribution of theoretical neuroscience is that it can link different levels of description, and in doing so can direct experiments to the questions of greatest relevance. The objective of the current project is to generate a theoretical description of experience-dependent plasticity in the rodent visual system. The advantages of rodents are, first, that knowledge of the molecular mechanisms of synaptic plasticity is relatively mature and continues to be advanced with genetic and pharmacological experiments, and second, that rodents show robust receptive field plasticity in visual cortex (VC) that can be easily and inexpensively monitored with chronic recording methods. The project aims are threefold. First, the activity of inputs to rat visual cortex will be recorded in different viewing conditions that induce receptive field (RF) plasticity, and these data will be integrated into formal models of synaptic plasticity. Second, the dynamics of RF plasticity will be simulated using existing spike rate-based algorithms and compared with experimental observations. Third, the consequences of new biophysically plausible plasticity algorithms, based on spike timing and metaplasticity, will be analyzed and compared with experiments.

Blais, B.S. and Kuindersma, S. 2005. Synaptic Modification in Spiking-Rate Models: A Comparison between Learning in Spiking Neurons and Rate-Based Neuron Models. In Society for Neuroscience Conference Abstracts.

Rate-based neuron models have been successful in understanding many aspects of development, such as the development of orientation selectivity (Bienenstock et al., 1982; Oja, 1982; Linsker, 1986; Miller, 1992; Bell and Sejnowski, 1997), the particular dynamics of visual deprivation (Blais et al., 1999), and the development of direction selectivity (Wimbauer et al., 1997; Blais et al., 2000). These models do not address phenomena such as temporal coding, spike-timing-dependent synaptic plasticity, or any short-time behavior of neurons. More detailed spiking models (Song et al., 2000; Shouval et al., 2002; Yeung et al., 2004) address these issues, and have had some success, but have failed to develop receptive fields in natural environments. These more detailed models are difficult to explore, given their large number of parameters and the run-time computational limitations. In addition, their results are often difficult to compare directly with the rate-based models.

We propose a model, which we call a spiking-rate model, that can serve as a middle ground between the oversimplistic rate-based models and the more detailed spiking models. The spiking-rate model is a spiking model where all of the underlying processes are continuous Poisson, the summation of inputs is entirely linear (although non-linearities can be added), and the generation of outputs is done by calculating a rate output and then generating an appropriate Poisson spike train. In this way, the limiting behavior is identical to a rate-based model, but the properties of spiking models can be incorporated more easily. We present the development of receptive fields with this model in various visual environments. We then present the necessary conditions for receptive field development in the spiking-rate models, and make comparisons to detailed spiking models, in order to more clearly understand the necessary conditions for receptive field development.
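
The three ingredients named above (Poisson inputs, linear summation, and a Poisson output generated from a computed rate) can be illustrated with a short Python sketch. It is not the model from the abstract: the discrete time step, the Bernoulli approximation to a Poisson process, the conversion of weighted input spikes back to an instantaneous rate, and the names `poisson_spike` and `simulate` are all assumptions made to keep the example small.

```python
import random


def poisson_spike(rate_hz, dt, rng):
    """Return 1 with probability rate_hz * dt, else 0.

    This is a Bernoulli approximation to a Poisson process; the
    probability is effectively capped at 1 when rate_hz * dt >= 1.
    """
    return 1 if rng.random() < rate_hz * dt else 0


def simulate(input_rates, weights, duration=10.0, dt=0.001, seed=0):
    """Return the mean output firing rate (Hz) of one spiking-rate neuron.

    input_rates : firing rate in Hz of each Poisson input
    weights     : synaptic weight of each input
    duration    : simulated time in seconds
    dt          : time step in seconds
    """
    rng = random.Random(seed)
    steps = int(round(duration / dt))
    out_spikes = 0
    for _ in range(steps):
        # 1. Each input emits spikes as an independent Poisson process.
        in_spikes = [poisson_spike(r, dt, rng) for r in input_rates]
        # 2. Linear summation, expressed as an instantaneous output rate
        #    (clipped at zero so it is a valid firing rate).
        drive = sum(w * s for w, s in zip(weights, in_spikes))
        out_rate = max(0.0, drive / dt)
        # 3. The output spike train is Poisson with that rate.
        out_spikes += poisson_spike(out_rate, dt, rng)
    return out_spikes / duration
```

With non-negative weights whose sum does not exceed 1, the expected output rate of this sketch equals the weighted sum of the input rates, which is the sense in which the limiting behavior matches a linear rate-based neuron. For example, `simulate([20.0, 40.0], [0.5, 0.25], duration=50.0)` should fluctuate around 20 Hz.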

Yeung, L.C., Shouval, H.Z., Blais, B.S., and Cooper, L.N. 2004. Synaptic Homeostasis and Input Selectivity Follow From a Calcium-Dependent Plasticity Model. Proceedings of the National Academy of Sciences. Vol 101, Issue 41. 14943-14948.

Modifications in the strengths of synapses are thought to underlie memory, learning, and development of cortical circuits. Many cellular mechanisms of synaptic plasticity have been investigated in which differential elevations of postsynaptic calcium concentrations play a key role in determining the direction and magnitude of synaptic changes. We have previously described a model of plasticity that uses calcium currents mediated by N-methyl-D-aspartate receptors as the associative signal for Hebbian learning. However, this model is not completely stable. Here, we propose a mechanism of stabilization through homeostatic regulation of intracellular calcium levels. With this model, synapses are stable and exhibit properties such as those observed in metaplasticity and synaptic scaling. In addition, the model displays synaptic competition, allowing structures to emerge in the synaptic space that reflect the statistical properties of the inputs. Therefore, the combination of a fast calcium-dependent learning and a slow stabilization mechanism can account for both the formation of selective receptive fields and the maintenance of neural circuits in a state of equilibrium.

Cooper, L.N., Intrator, N., Blais, B.S., and Shouval, H.Z. 2004. Theory of Cortical Plasticity. World Scientific Publishing.

This invaluable book presents a theory of cortical plasticity and shows how this theory leads to experiments that test both its assumptions and consequences. It elucidates, in a manner that is accessible to students as well as researchers, the role which the BCM theory has played in guiding research and suggesting experiments that have led to our present understanding of the mechanisms underlying cortical plasticity. Most of the connections between theory and experiment that are discussed require complex simulations. A unique feature of the book is the accompanying software package, Plasticity. This is provided complete with source code, and enables the reader to repeat any of the simulations quoted in the book as well as to vary either parameters or assumptions. Plasticity is thus a research and an educational tool. Readers can use it to obtain hands-on knowledge of the structure of BCM and various other learning algorithms. They can check and replicate our results as well as test algorithms and refinements of their own.