
In a previous post I examined a simple model of the interaction of testimony with scientific inquiry, and how it can affect the probabilities of the truth of miracle claims. In this post I examine a single result from Timothy and Lydia McGrew's article in The Blackwell Companion to Natural Theology, "Chapter 11 - The Argument from Miracles: A Cumulative Case for the Resurrection of Jesus of Nazareth". This paper is discussed in this YouTube episode, Bad Apologetics Ep 18 - Bayes Machine goes BRRRRRRRRR, with Nathan Ormond, Kamil Gregor, and James Fodor, and is summarized in text form on my blog starting here.

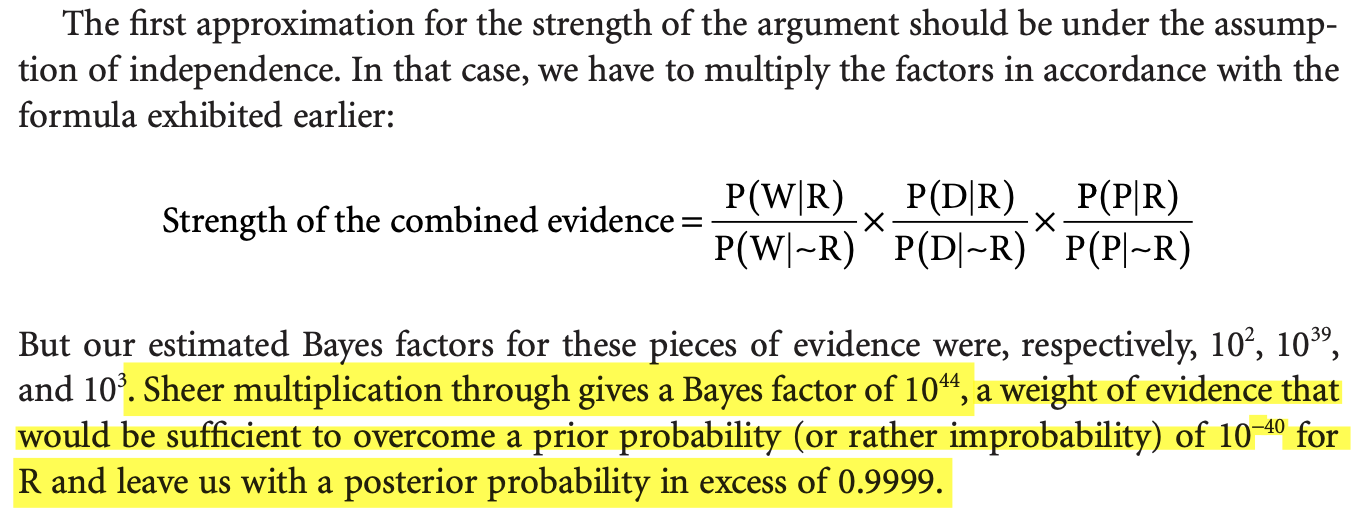

The result I want to look at is here:

The basic idea the McGrews have is that there are \(N=15\) data points, each with a Bayes factor of 1000 and each completely independent of the others, so that the product of the probabilities yields a Bayes factor of around \(1000^{15}=10^{45}\) (one of their Bayes factors is only 100, so they get \(10^{44}\), but the idea is the same). For mathematical simplicity, I'll use \(10^{45}\) in the rest of this post. We discuss in the video above how ridiculous \(10^{44}\) is as a Bayes factor: there may not even be modern physical theories with Bayes factors that high. In this post, however, I want to take a direction that I haven't seen before but that I think is enlightening.
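As a quick check of the arithmetic, here is a minimal Python sketch that multiplies the per-source Bayes factors, assuming (as the McGrews do) that all fifteen sources are independent. The two lists simply encode the numbers stated above: fourteen factors of 1000 plus one of 100 for the McGrews, and fifteen factors of 1000 for the simplified version used in this post.

```python
from math import log10, prod

# Per-source Bayes factors as described above (independence assumed).
mcgrew_factors = [1000] * 14 + [100]   # one factor is only 100
simplified_factors = [1000] * 15       # simplification used in this post

# Under full independence, the combined Bayes factor is the plain product.
combined_mcgrew = prod(mcgrew_factors)
combined_simplified = prod(simplified_factors)

print(f"McGrews:   10^{log10(combined_mcgrew):.0f}")      # 10^44
print(f"This post: 10^{log10(combined_simplified):.0f}")  # 10^45
```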

TLDR: One can be supremely confident that all 15 sources are statistically independent, with probability \(p=0.9995\) (far higher than the confidence behind many scientific claims in published journals), and still not be able to justify the miracle claim because of that small remaining uncertainty. This fact alone should disqualify the result the McGrews present.

One of the properties of all inference, particularly historical inference, is that there is some level of uncertainty. Do we know that, say, two claims in an ancient document are statistically independent? That's very hard to establish. The typical approach is to argue that the claims are textually independent and thus more likely to be statistically independent. This is a loose inference, because it is very easy for two texts to draw from the same oral tradition and thus not represent independent information. It's even hard in modern contexts to establish statistical independence between people making claims, even when you can interrogate the people and investigate the claims directly -- neither of which we can do for ancient texts.

So, that being said, it seems plausible that there is some degree of uncertainty even in establishing the independence of data sources. I'd like to explore how much uncertainty the McGrews' calculation can tolerate while still maintaining their argument. It is a sign of the strength of an argument if it can withstand some reasonable level of uncertainty.

I use the same notation as I did in this post on testimony.

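We're interested in \(P(M|D_1, D_2, \ldots, D_n)\), but to establish a couple more definitions, let's look at the two-data-point case.

In the two-data-point case, Bayes' theorem in odds form gives
\[\frac{P(M|D_1,D_2)}{P(\bar{M}|D_1,D_2)}=\frac{P(D_2|M,D_1)}{P(D_2|\bar{M},D_1)}\,\frac{P(D_1|M)}{P(D_1|\bar{M})}\,\frac{P(M)}{P(\bar{M})}\]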

We define the likelihood term for a single data point as
\[P(D_i|M)=d,\qquad P(D_i|\bar{M})=b\]

The McGrews do not specify these probabilities numerically; they specify only their ratio, which is the single-point Bayes factor they (arbitrarily) assume:
\[\frac{d}{b}=1000\]

They also assume independence of all the sources, which would imply
\[P(D_2|M,D_1)=P(D_2|M)=d,\qquad P(D_2|\bar{M},D_1)=P(D_2|\bar{M})=b\]

The odds ratio then becomes
\[\frac{P(M|D_1,D_2)}{P(\bar{M}|D_1,D_2)}=\left(\frac{d}{b}\right)^2\frac{P(M)}{P(\bar{M})}\]

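Following the same procedure for \(n\) points, we have
\[\frac{P(M|D_1,\ldots,D_n)}{P(\bar{M}|D_1,\ldots,D_n)}=\left(\frac{d}{b}\right)^n\frac{P(M)}{P(\bar{M})}\]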

For the McGrews, \(n=15\) and \(d/b=1000\), so they claim that the prior odds \(\frac{P(M)}{P(\bar{M})}\) would need to be less than \(10^{-45}\) to overcome the evidence, and they can't imagine that being the case except for an overly skeptical person.
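The independent-case formula is easy to encode directly. This is a minimal sketch; the function name and the choice to pass the Bayes factor \(d/b\) rather than \(d\) and \(b\) separately are my own (in the fully independent case only the ratio matters).

```python
def posterior_odds_independent(prior_odds: float, bayes_factor: float, n: int) -> float:
    """Posterior odds P(M|D_1..D_n) / P(not M|D_1..D_n) for n independent data.

    Each datum multiplies the odds by the same single-point Bayes factor d/b,
    so the total factor is (d/b)**n.
    """
    return bayes_factor ** n * prior_odds


# With n = 15 and d/b = 1000, prior odds of 1e-45 leave the posterior at even odds,
# and anything smaller leaves the miracle less likely than not.
print(posterior_odds_independent(1e-45, 1000, 15))
print(posterior_odds_independent(1e-46, 1000, 15))
```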

Now what happens if the data are not independent or, if you prefer to avoid the double-negative, if the data are dependent? Then the probability of the second data point will be different, but how?

Imagine someone observes a fair coin that has been flipped and is lying on the table. They write down the result and leave the room. They then come back in, observe the coin and write the result. If during their absence,

Since any possibility in between can occur, we need to know the process behind the dependence to determine, at a minimum, whether the value even goes up or down. In the case of testimony, it is far more likely for new testimony that depends on old testimony to give the same or similar information as the old. It would be odd indeed (even though the McGrews argue for this) for the new testimony to tend to be the opposite of the old. The consequences of this odd view are explored in the video and also in the summary here, so I won't go into them in this post.
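To make the point concrete, the following Python sketch simulates three things that might happen to the coin while the observer is away and estimates how often the second record matches the first. These three scenarios ("untouched", "reflipped", "turned") are hypothetical illustrations of my own, not taken from the text; they show that dependence can push the probability above or below the independent value of 1/2, depending on the process.

```python
import random


def repeat_agreement(process: str, trials: int = 100_000, seed: int = 0) -> float:
    """Estimate P(second record == first record) for a hypothetical process.

    process (illustrative scenarios, not from the original text):
      "untouched" - nobody touches the coin: fully dependent, same result
      "reflipped" - someone flips it again: independent of the first record
      "turned"    - someone always turns it over: dependent, opposite result
    """
    rng = random.Random(seed)
    matches = 0
    for _ in range(trials):
        first = rng.choice("HT")  # fair coin observed the first time
        if process == "untouched":
            second = first
        elif process == "reflipped":
            second = rng.choice("HT")
        elif process == "turned":
            second = "T" if first == "H" else "H"
        else:
            raise ValueError(f"unknown process: {process}")
        matches += int(first == second)
    return matches / trials


for process in ("untouched", "reflipped", "turned"):
    print(process, repeat_agreement(process))
```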

If \(D_2\) is completely dependent on \(D_1\), then \(D_2\) adds no new information. In other words, given \(D_1\), \(D_2\) follows necessarily:
\[P(D_2|M,D_1)=P(D_2|\bar{M},D_1)=1\quad\Rightarrow\quad\frac{P(M|D_1,D_2)}{P(\bar{M}|D_1,D_2)}=\frac{d}{b}\,\frac{P(M)}{P(\bar{M})}\]

In the \(n\)-data point case, this becomes
\[\frac{P(M|D_1,\ldots,D_n)}{P(\bar{M}|D_1,\ldots,D_n)}=\frac{d}{b}\,\frac{P(M)}{P(\bar{M})}\]

Using the McGrews' numbers here, we get
\[\frac{P(M|D_1,\ldots,D_n)}{P(\bar{M}|D_1,\ldots,D_n)}=1000\,\frac{P(M)}{P(\bar{M})}\]

which means that the prior odds against a miracle need only be worse than 1000 to 1 to overcome this evidence: not a very strong case for the miracle. Clearly, dependent sources undermine the McGrews' case. But how much dependency is needed?
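The fully dependent case can be sketched the same way. In this minimal Python example (function name my own) every datum after the first contributes a likelihood ratio of 1, so the number of sources drops out; the prior odds of \(10^{-4}\) are a hypothetical value chosen only to show a prior worse than 1 in 1000 winning.

```python
def posterior_odds_dependent(prior_odds: float, bayes_factor: float, n: int) -> float:
    """Posterior odds when D_2..D_n are completely dependent on D_1.

    Each later datum has P(D_k|M,D_1) = P(D_k|not M,D_1) = 1, so it contributes
    a likelihood ratio of 1; only the first datum's factor d/b survives.
    """
    later_factor = 1.0 ** (n - 1)  # n - 1 dependent data, each adding nothing
    return bayes_factor * later_factor * prior_odds


# Hypothetical prior odds of 1e-4 (worse than 1 in 1000): the posterior odds
# stay below 1, so the evidence no longer favors the miracle.
print(posterior_odds_dependent(1e-4, 1000, 15))
```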

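We'll start with the following simplified model of the uncertainty in establishing independence. For each data point after the first, there is some probability that the data point is independent of the first. We'll call that value \(\beta\). For intuition, note that \(\beta=1\) corresponds to the McGrews' assumption of complete independence, while \(\beta=0\) means that every data point after the first is completely dependent on the first.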

Later we generalize this calculation to the case of partial dependency, but the conclusion is identical to that of this simpler, somewhat more intuitive model.

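For the 2-data point case we have
\[\frac{P(M|D_1,D_2)}{P(\bar{M}|D_1,D_2)}=\frac{\beta d+(1-\beta)}{\beta b+(1-\beta)}\,\frac{d}{b}\,\frac{P(M)}{P(\bar{M})}\]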

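where the likelihood term for the second data point, \(P(D_2|M,D_1)\), is broken up into two pieces: a value of \(d\) for the independent case, with probability \(\beta\), and a value of 1 for the dependent case, with probability \(1-\beta\) (and likewise \(b\) or 1 for \(P(D_2|\bar{M},D_1)\)). Generalizing this to the \(n\)-data point case,
\[\frac{P(M|D_1,\ldots,D_n)}{P(\bar{M}|D_1,\ldots,D_n)}=\left(\frac{\beta d+(1-\beta)}{\beta b+(1-\beta)}\right)^{n-1}\frac{d}{b}\,\frac{P(M)}{P(\bar{M})}\]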
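Here is a minimal Python sketch of the likelihood factor in this mixture model. The absolute values \(d=10^{-3}\) and \(b=10^{-6}\) are hypothetical; the McGrews give only the ratio \(d/b=1000\), which these values preserve. The two limits recover the earlier results: \(\beta=1\) gives \((d/b)^n\) and \(\beta=0\) gives \(d/b\).

```python
def likelihood_factor(beta: float, d: float, b: float, n: int) -> float:
    """Bayes factor P(D_1..D_n|M) / P(D_1..D_n|not M) in the simple
    independence-uncertainty model.

    The first datum contributes d/b. Each later datum is independent of D_1
    with probability beta (likelihood d under M, b under not-M) and fully
    dependent with probability 1 - beta (likelihood 1 under either).
    """
    per_point = (beta * d + (1 - beta)) / (beta * b + (1 - beta))
    return per_point ** (n - 1) * (d / b)


# Hypothetical absolute likelihoods; only the ratio d/b = 1000 comes from the text.
d, b, n = 1e-3, 1e-6, 15
print(likelihood_factor(1.0, d, b, n))  # about 1e45: the fully independent case
print(likelihood_factor(0.0, d, b, n))  # about 1e3: the fully dependent case
```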

To reproduce the result of the McGrews, we have the following:

From the equation above we get

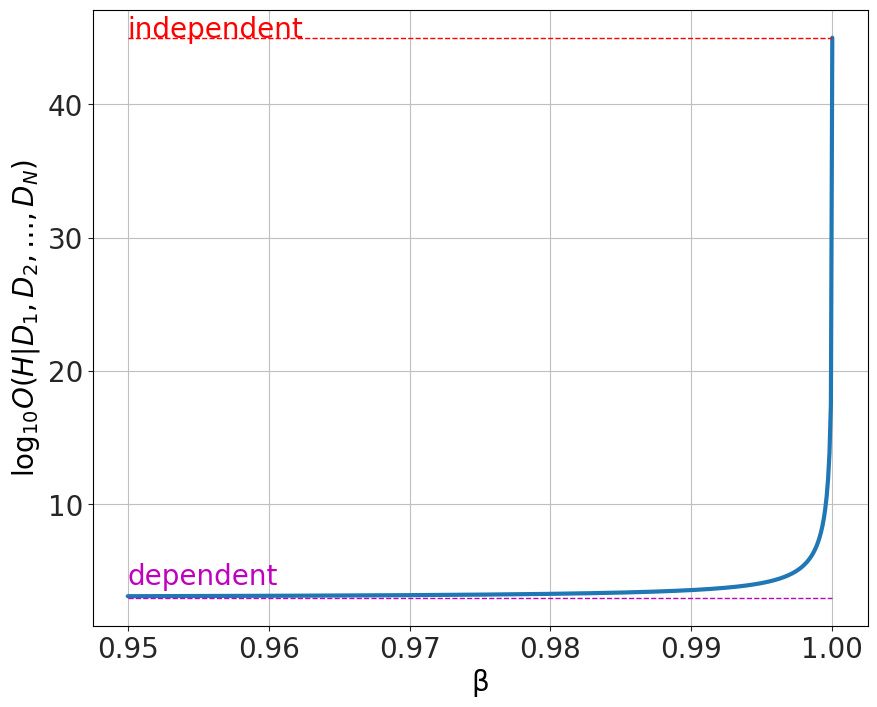

The question here is how much uncertainty this argument can tolerate. In other words, how far can \(\beta\) deviate from a value of 1 before the odds ratio comes down to a reasonable level?

A very rough upper bound on the prior for the Resurrection of Jesus can be obtained using the following logic. There are 8 billion people on the planet, perhaps twice that many in all of history, and (at least for the Christians) only one supported Resurrection. So, whether or not one believes in the Resurrection, the prior should be no larger than 1 in 10 billion. This is, I think, the maximum possible value of a prior I'd consider. It is comparable to the naive model of the probability of the sun rising, which I discuss elsewhere: it takes into account nothing we have come to understand from science, so it is clearly an extremely conservative upper bound.
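The arithmetic behind that bound can be checked directly. This Python snippet only encodes the post's own rough numbers (about 8 billion people now, roughly twice that over all of history, one supported resurrection); it is a back-of-the-envelope sketch, not a demographic estimate.

```python
# Rough inputs taken from the reasoning above (the post's own estimates).
people_now = 8e9
people_ever = 2 * people_now   # "perhaps twice that many in all of history"
supported_resurrections = 1

prior_upper_bound = supported_resurrections / people_ever
print(f"{prior_upper_bound:.2e}")  # 6.25e-11, below the 1-in-10-billion bound used in the text
```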

We want to know where the first part of the odds ratio,
\[\left(\frac{\beta d+(1-\beta)}{\beta b+(1-\beta)}\right)^{n-1}\frac{d}{b},\]
drops below \(10^{10}\), so that prior odds of \(\frac{P(M)}{P(\bar{M})}\sim 10^{-10}\) will overwhelm it and the argument fails. This point can be found by plotting the base-10 logarithm of this factor as a function of \(\beta\) and noting where the value dips below 10.

This result surprised me. The tiniest deviation from the absolute certainty that all 15 sources are statistically independent brings the odds ratio down to the mundane. It drops below \(10^{10}\) at around \(\beta=0.9995\)!


What this means is that one can be supremely confident that all 15 sources are statistically independent, with probability \(p=0.9995\) (far higher than the confidence behind many scientific claims in published journals), and still not be able to justify the miracle claim because of that small remaining uncertainty. This fact alone should disqualify the result.

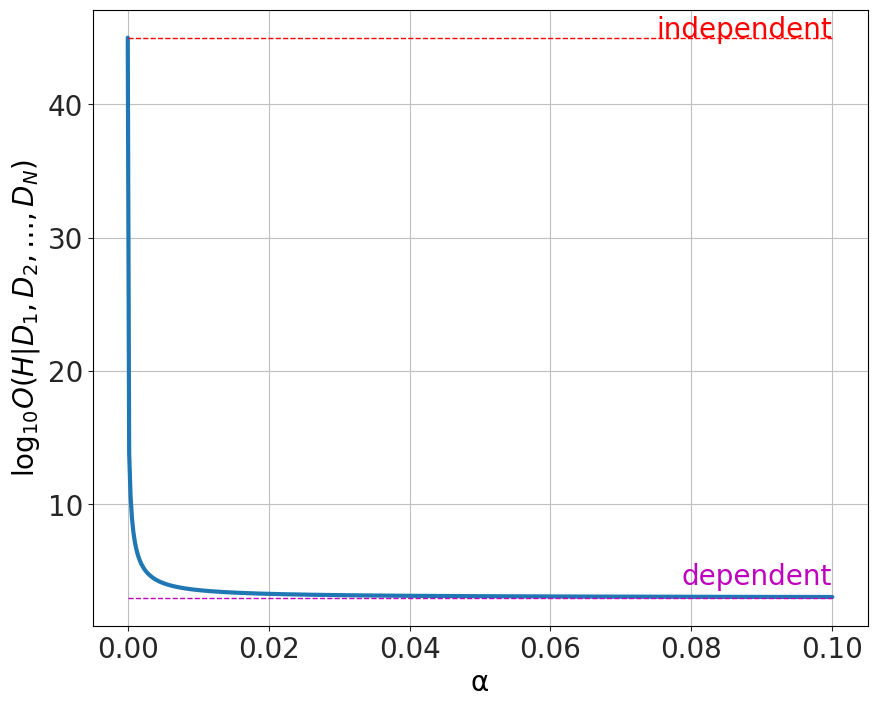

One could criticize this result by saying that the choice isn't between perfectly independent and perfectly dependent, but that data points can be partially dependent yet mostly independent, and that this may be enough. TLDR for this section: you get the same result as with the previous model.

To handle this, we reparameterize the 2-data point case. Instead of the independent case \(P(D_2|M,D_1)=d\) or the dependent case \(P(D_2|M,D_1)=1\), we have a tunable version \(P(D_2|M,D_1)=d+\alpha (1-d)\), where \(\alpha\) measures how dependent each point is, from totally independent (\(\alpha=0\)) to totally dependent (\(\alpha=1\)). So each data point can be partially, but not totally, dependent. The \(n\)-data point generalization becomes
\[\frac{P(M|D_1,\ldots,D_n)}{P(\bar{M}|D_1,\ldots,D_n)}=\left(\frac{d+\alpha(1-d)}{b+\alpha(1-b)}\right)^{n-1}\frac{d}{b}\,\frac{P(M)}{P(\bar{M})}\]

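The question here is how far from perfect independence we can go before things break. Since \(d+\alpha(1-d)=(1-\alpha)d+\alpha\) (and likewise for \(b\)), this model is exactly the previous one with \(\beta=1-\alpha\), so the result is the same as before: the odds ratio drops below \(10^{10}\) at around \(\alpha=1-0.9995=0.0005\).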

The tiniest deviation from independence results in the argument completely collapsing.

As stated before, what this means is that one can be supremely confident that all 15 sources are statistically independent, with probability \(p=0.9995\) (far higher than the confidence behind many scientific claims in published journals), and still not be able to justify the miracle claim because of that small remaining uncertainty. This fact alone should disqualify the result.

Also, it speaks to the total naivety of the apologists' calculations. They wrap their argument in seemingly technical and advanced Bayesian analyses, yet they don't seem to recognize that their argument comes down to assuming that testimony, evidence, and the scientific method are identical to simple coin-flip experiments.

So when I hear things like this from Jonathan McLatchie:

"To this question, I would point out that (contrary to popular notions) it is not necessary for a hypothesis to be able to make high probability predictions in order for it to be well evidentially supported. Rather, it is only necessary that the pertinent data be rendered more probable given the hypothesis than it would be on its falsehood." (post here)

I remind myself that this is a totally simplistic way of approaching probability problems, especially in the case of things as messy as human testimony and ancient documents.