
This is another in the series of "Statistics 101" examples solved with MCMC. Others in the series:

In all of these posts I use a Python library I wrote for my science-programming class, stored on GitHub, along with the emcee library. Install them with:

```bash
pip install "git+https://github.com/bblais/sci378" --upgrade
pip install emcee
```

In this example, as in another one in the series, there is some true value, which we call <math xmlns="http://www.w3.org/1998/Math/MathML"><semantics><mrow><msub><mi>x</mi><mi>o</mi></msub></mrow><annotation encoding="application/x-tex">x_o</annotation></semantics></math>. The <math xmlns="http://www.w3.org/1998/Math/MathML"><semantics><mrow><mi>N</mi></mrow><annotation encoding="application/x-tex">N</annotation></semantics></math> data points we have, <math xmlns="http://www.w3.org/1998/Math/MathML"><semantics><mrow><mo stretchy="false">{</mo><msub><mi>x</mi><mi>i</mi></msub><mo stretchy="false">}</mo></mrow><annotation encoding="application/x-tex">\{x_i\}</annotation></semantics></math>, are drawn from a particular distribution centered on that true value, in this case the Cauchy distribution:

<math display="block" xmlns="http://www.w3.org/1998/Math/MathML"><semantics><mrow><mi>P</mi><mo stretchy="false">(</mo><mi>x</mi><mi mathvariant="normal">∣</mi><msub><mi>x</mi><mi>o</mi></msub><mo separator="true">,</mo><mi>γ</mi><mo stretchy="false">)</mo><mo>=</mo><mfrac><mn>1</mn><mrow><mi>π</mi><mi>γ</mi></mrow></mfrac><mrow><mo fence="true">(</mo><mfrac><msup><mi>γ</mi><mn>2</mn></msup><mrow><mo stretchy="false">(</mo><mi>x</mi><mo>−</mo><msub><mi>x</mi><mi>o</mi></msub><msup><mo stretchy="false">)</mo><mn>2</mn></msup><mo>+</mo><msup><mi>γ</mi><mn>2</mn></msup></mrow></mfrac><mo fence="true">)</mo></mrow></mrow><annotation encoding="application/x-tex"> P(x|x_o,\gamma)= \frac{1}{\pi\gamma}\left(\frac{\gamma^2}{(x-x_o)^2+\gamma^2}\right) </annotation></semantics></math>
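In code it is usually easier to work with the logarithm of this density. Taking the log of the expression above gives log(γ/π) - log((x - x_o)² + γ²) for each point, and for independent data points the log-likelihood is the sum of these terms. The function below is a hypothetical stand-in for the sci378 helper `logcauchypdf` used later; whether the real helper sums over points in exactly this way is an assumption.

```python
import numpy as np


def log_cauchy_pdf(x, x_o, gamma):
    """Hypothetical stand-in for sci378's logcauchypdf.

    Returns the log of P(x | x_o, gamma) summed over all points in x,
    i.e. the log-likelihood of independent data points.
    """
    x = np.asarray(x, dtype=float)
    # log[(1/(pi*gamma)) * gamma^2 / ((x - x_o)^2 + gamma^2)]
    #   = log(gamma / pi) - log((x - x_o)^2 + gamma^2)
    return np.sum(np.log(gamma / np.pi) - np.log((x - x_o) ** 2 + gamma ** 2))


print(log_cauchy_pdf([3.0, 5.0], x_o=4.0, gamma=1.0))
```

At the peak, x = x_o, a single point contributes log(1/(πγ)), the maximum of the density.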

This distribution has a number of applications, many of which involve circular geometry. I was introduced to it through the so-called lighthouse problem and E. T. Jaynes's excellent paper "Confidence Intervals vs Bayesian Intervals".
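The lighthouse problem also shows where the Cauchy comes from. Suppose a lighthouse sits a distance γ offshore, opposite the shore position x_o, and sends out flashes in random directions, uniform in angle θ. A flash that reaches the shore lands at x = x_o + γ tan θ, so P(x) = (1/π) dθ/dx = (1/π) γ/((x - x_o)² + γ²), which is exactly the formula above. The NumPy sketch below simulates this; `lighthouse_flashes` is a hypothetical helper, and the check relies on the fact that half the Cauchy probability lies within γ of x_o (|tan θ| < 1 exactly when |θ| < π/4).

```python
import numpy as np


def lighthouse_flashes(x_o, gamma, n, seed=0):
    """Hypothetical helper: shore positions of n flashes from a lighthouse
    a distance gamma offshore, directly opposite shore position x_o."""
    rng = np.random.default_rng(seed)
    # Directions of the flashes that reach the shore, uniform in angle
    theta = rng.uniform(-np.pi / 2, np.pi / 2, size=n)
    # Geometry: a flash at angle theta lands at x_o + gamma * tan(theta)
    return x_o + gamma * np.tan(theta)


flashes = lighthouse_flashes(x_o=4.0, gamma=1.0, n=100_000)
# For a Cauchy, half the probability lies within gamma of x_o
print(np.mean(np.abs(flashes - 4.0) < 1.0))
```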

Although this distribution has a single peak at its central value, <math xmlns="http://www.w3.org/1998/Math/MathML"><semantics><mrow><msub><mi>x</mi><mi>o</mi></msub></mrow><annotation encoding="application/x-tex">x_o</annotation></semantics></math>, and looks vaguely Gaussian, it has mathematical properties that make it very different. For example, its mean and variance are undefined, so it does not satisfy the conditions of the central limit theorem. As a result, it is essentially never covered in introductory statistics classes. With the computational approach described here, however, the Cauchy is no harder to work with than the Normal.
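A quick simulation makes the failure of averaging concrete. The mean of N independent Cauchy draws is itself Cauchy with the same width γ, so it never settles down as N grows, while the sample median does converge to x_o. This sketch uses NumPy's `standard_cauchy` sampler; the values x_o = 4 and γ = 1 are arbitrary choices for illustration.

```python
import numpy as np

rng = np.random.default_rng(42)
x_o, gamma = 4.0, 1.0  # illustrative true center and width

# standard_cauchy draws from a Cauchy centered at 0 with width 1;
# shift and scale to get center x_o and width gamma
samples = x_o + gamma * rng.standard_cauchy(size=100_000)

for n in (100, 1_000, 10_000, 100_000):
    subset = samples[:n]
    print(f"N={n:>7}: mean={np.mean(subset):10.3f}  median={np.median(subset):7.3f}")
```

Across different seeds, the means typically scatter by about γ around x_o no matter how large N is, while the medians tighten toward 4.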

In E. T. Jaynes's example, the data consist of just two points: <math xmlns="http://www.w3.org/1998/Math/MathML"><semantics><mrow><mo stretchy="false">{</mo><msub><mi>x</mi><mi>i</mi></msub><mo stretchy="false">}</mo><mo>=</mo><mo stretchy="false">{</mo><mn>3</mn><mo separator="true">,</mo><mn>5</mn><mo stretchy="false">}</mo></mrow><annotation encoding="application/x-tex">\{x_i\} = \{3,5\}</annotation></semantics></math>. Remarkably, frequentist methods can't address this problem. The likelihood is set up the same way as in previous posts:

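```python
from numpy import array

# Assumes MCMCModel, Uniform, Jeffreys and logcauchypdf have already been
# imported from the sci378 library (the import line is not shown here).

def lnlike(data, x_o, γ):
    # Log-likelihood of the data under a Cauchy with center x_o and width γ
    x = data
    return logcauchypdf(x, x_o, γ)

data = array([3., 5])

model = MCMCModel(data, lnlike,
                  x_o=Uniform(-50, 50),  # flat prior on the center
                  γ=Jeffreys(),          # Jeffreys (scale-invariant) prior on the width
                  )
```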

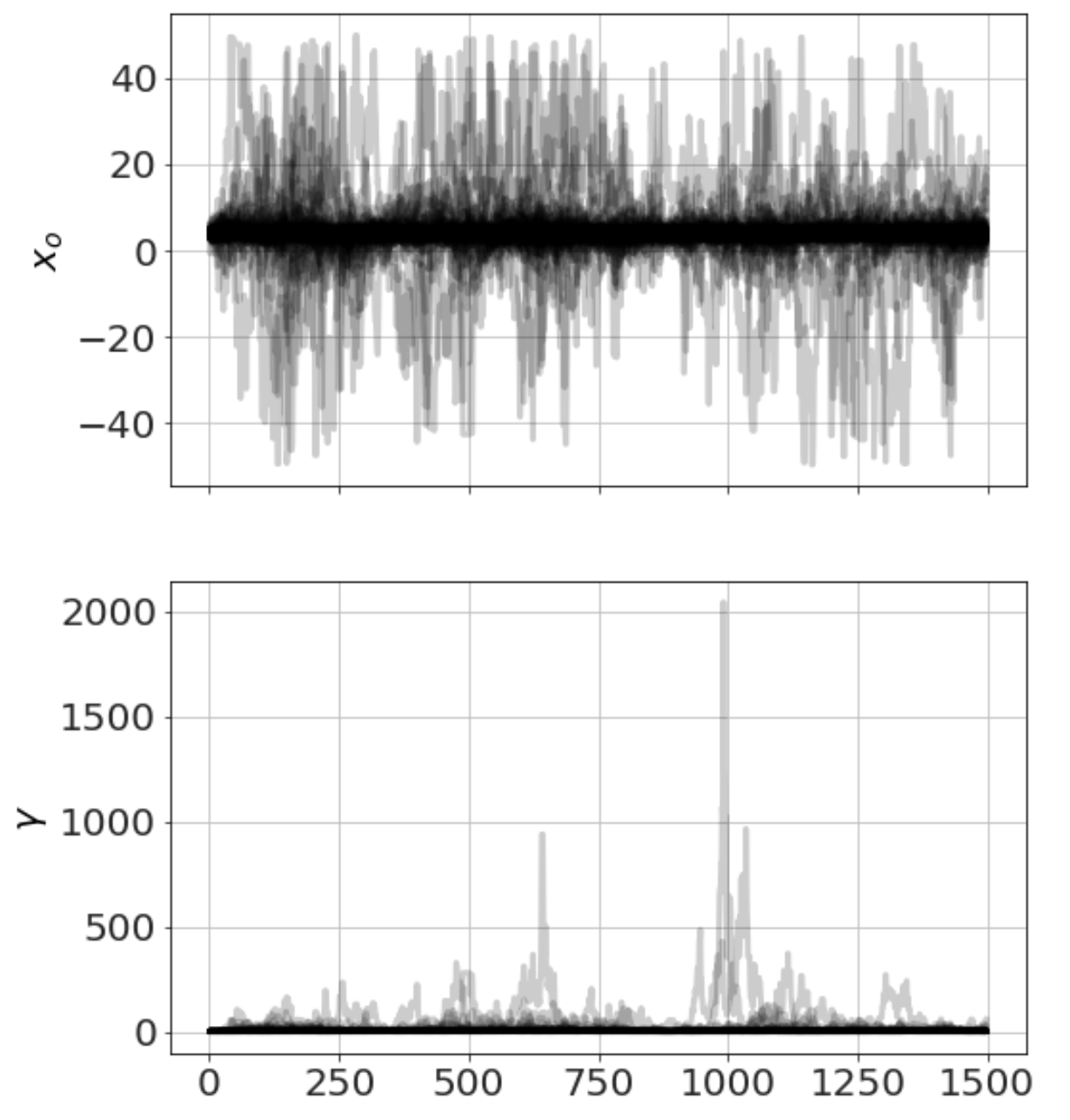

Now we run the MCMC, plot the chains (to check that they have converged), and look at the distributions:

```python
model.run_mcmc(1500, repeat=3)
model.plot_chains()
```

```
Sampling Prior...
Done.
0.43 s
Running MCMC 1/3...
Done.
3.74 s
Running MCMC 2/3...
Done.
3.61 s
Running MCMC 3/3...
Done.
3.66 s
```
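For readers who want to see what happens under the hood without sci378, here is a minimal sketch that samples the same kind of posterior with a plain random-walk Metropolis algorithm in NumPy. This is a swapped-in technique: the post's setup relies on emcee, which uses an affine-invariant ensemble sampler instead. The priors are assumptions meant to mirror the model above (uniform on [-50, 50] for x_o, and a Jeffreys prior p(γ) ∝ 1/γ). Sampling in log γ turns the Jeffreys prior into a flat prior, so no extra prior term is needed. The names `log_posterior` and `metropolis` are hypothetical.

```python
import numpy as np


def log_posterior(params, data):
    """Unnormalized log posterior for params = (x_o, log_gamma).

    Assumed priors (chosen to mirror the model above, not taken from sci378):
    - x_o: uniform on [-50, 50]
    - gamma: Jeffreys, p(gamma) ∝ 1/gamma, which is flat in log_gamma
    """
    x_o, log_gamma = params
    if not -50.0 <= x_o <= 50.0:
        return -np.inf  # outside the uniform prior's support
    gamma = np.exp(log_gamma)
    # Sum over data points of log[(1/(pi*gamma)) * gamma^2 / ((x - x_o)^2 + gamma^2)]
    return np.sum(np.log(gamma / np.pi) - np.log((data - x_o) ** 2 + gamma ** 2))


def metropolis(data, n_steps=50_000, step=1.0, seed=0):
    """Random-walk Metropolis sampler over (x_o, log_gamma)."""
    rng = np.random.default_rng(seed)
    current = np.array([np.median(data), 0.0])  # start at the data median, gamma = 1
    current_lp = log_posterior(current, data)
    samples = np.empty((n_steps, 2))
    for i in range(n_steps):
        # Propose a Gaussian step in both parameters
        proposal = current + step * rng.normal(size=2)
        proposal_lp = log_posterior(proposal, data)
        # Accept with probability min(1, p(proposal) / p(current))
        if np.log(rng.uniform()) < proposal_lp - current_lp:
            current, current_lp = proposal, proposal_lp
        samples[i] = current
    return samples


data = np.array([3.0, 5.0])
samples = metropolis(data)[5_000:]  # discard the first 5,000 steps as burn-in
x_o_samples = samples[:, 0]
gamma_samples = np.exp(samples[:, 1])

print("median x_o:", np.median(x_o_samples))
print("95% interval for x_o:", np.percentile(x_o_samples, [2.5, 97.5]))
print("median gamma:", np.median(gamma_samples))
```

The median of the x_o samples plays the role of the best estimate, and the percentiles give a credible interval. Because this uses a different sampler and assumed priors, its numbers should be compared with the plots in this post only qualitatively.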

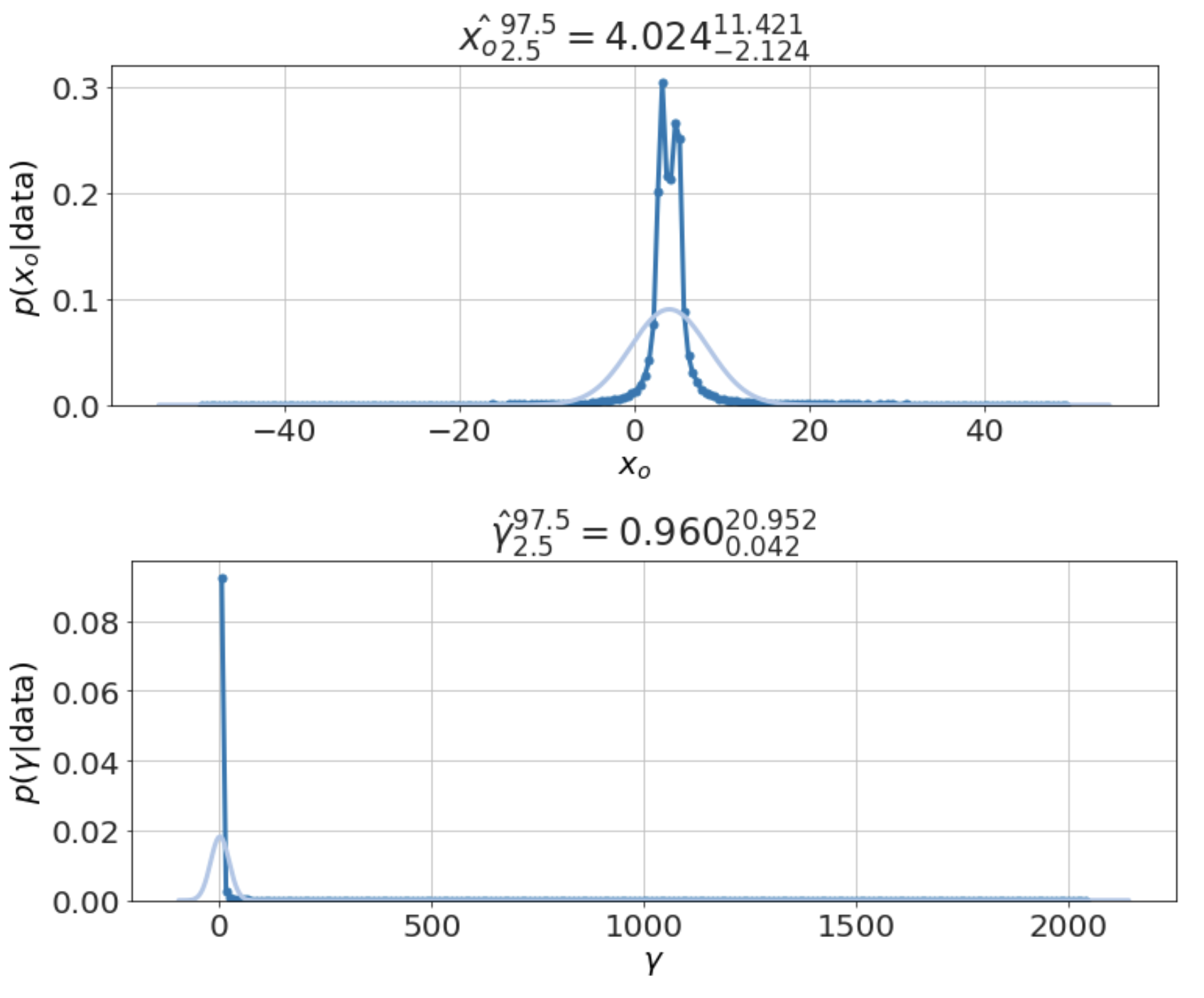

```python
model.plot_distributions()
```

Although the Cauchy has no defined mean, one can show that the median of the data is a reliable estimate of the central value, <math xmlns="http://www.w3.org/1998/Math/MathML"><semantics><mrow><msub><mi>x</mi><mi>o</mi></msub></mrow><annotation encoding="application/x-tex">x_o</annotation></semantics></math>, though the median alone says nothing about the uncertainty. Since the median of {3, 5} is 4, the estimate we find above, <math xmlns="http://www.w3.org/1998/Math/MathML"><semantics><mrow><msub><mi>x</mi><mi>o</mi></msub><mo>∼</mo><mn>4</mn></mrow><annotation encoding="application/x-tex">x_o \sim 4</annotation></semantics></math>, matches what we intuit to be the correct answer.

The point here, however, is that the same Bayesian methods for finding the best estimates and their uncertainties work for this case just as for all the others, with very little extra effort, whereas traditional methods fail. It is telling that, as far as I can tell, statistics textbooks uniformly ignore this entire distribution, seemingly just to avoid its problems. The Bayesian approach, in contrast, provides a single, uniform procedure for all such problems, and that procedure consistently produces useful results.